InstantXR

6DoF Navigation is freely available with the hybrid rendering of cloud-based NeRF.

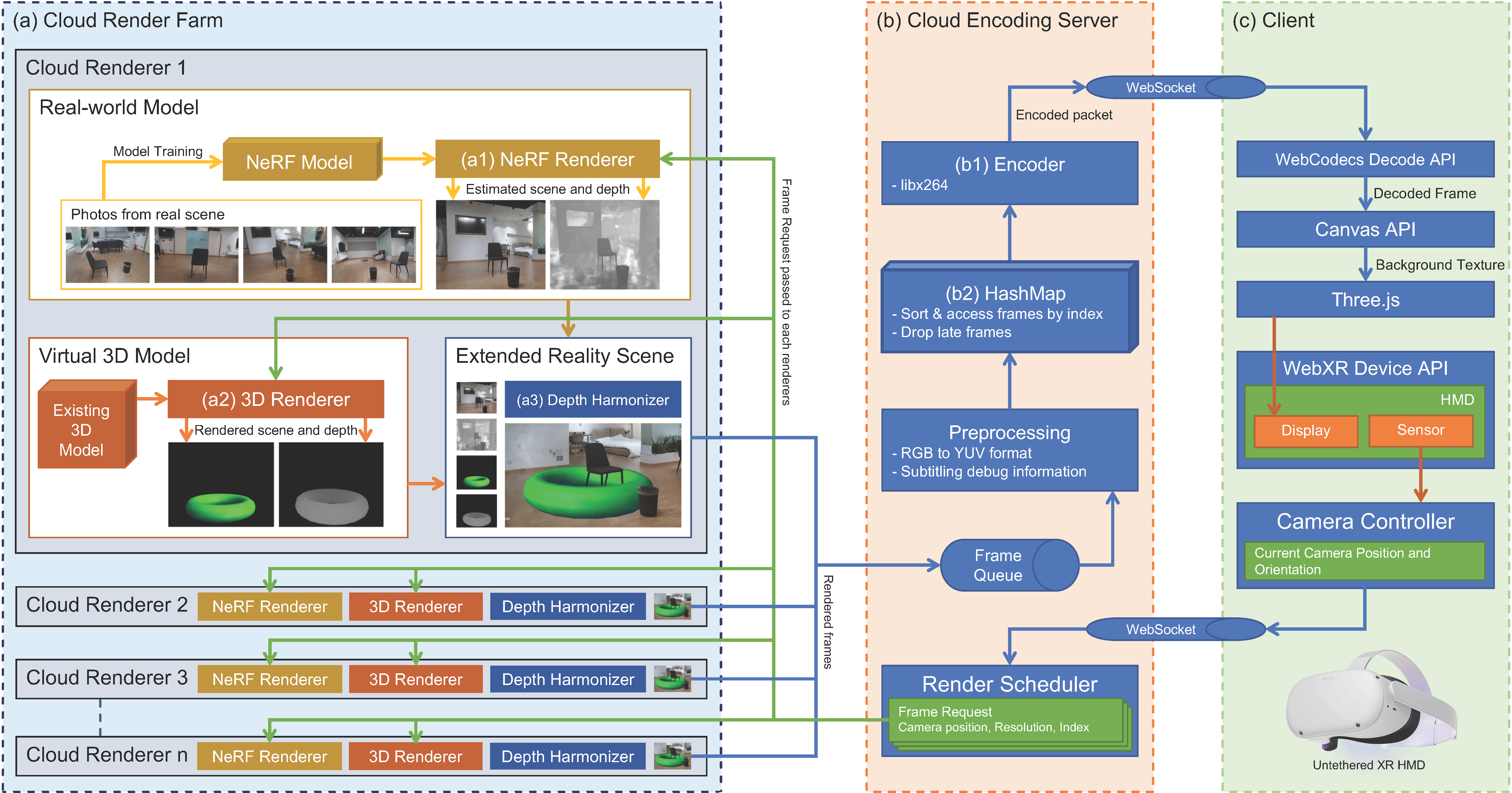

For an XR environment to be useful in a real-life task, all of its content must be created and delivered where and when it is needed. To deliver an XR environment quickly and correctly, the time spent on modeling should be considerably reduced or eliminated. In this paper, we propose a hybrid method that fuses conventional rendering of 3D assets with neural radiance fields (NeRF), a technology that uses photographs to create and display an instantly generated XR environment in real time, without a modeling process. Although NeRF generates a relatively realistic space with little effort, it is computationally expensive. To reduce the resulting latency, we propose a cloud-based distributed acceleration architecture. Furthermore, we implemented an XR streaming structure that can process input from an XR device in real time. Consequently, our hybrid method for real-time XR generation using NeRF and 3D graphics is available to lightweight mobile XR clients, such as untethered HMDs. The proposed technology makes it possible to quickly virtualize one location and deliver it to another remote location, making virtual sightseeing and remote collaboration more accessible to the public.
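One common way to fuse a neural rendering with rasterized 3D assets is a per-pixel depth test, and the sketch below illustrates that idea only. It is not taken from the paper: the function name `composite_by_depth`, the nested-list image layout, and the assumption that both layers are rendered from the same camera pose with depth in the same units are all hypothetical choices made for illustration.

```python
import math
from typing import List, Tuple

Color = Tuple[int, int, int]


def composite_by_depth(
    nerf_rgb: List[List[Color]],
    nerf_depth: List[List[float]],
    asset_rgb: List[List[Color]],
    asset_depth: List[List[float]],
) -> List[List[Color]]:
    """Keep, for every pixel, whichever layer is closer to the camera.

    Hypothetical helper: assumes all four images share the same size and
    that pixels not covered by any 3D asset carry a depth of math.inf.
    """
    height = len(nerf_rgb)
    width = len(nerf_rgb[0]) if height else 0
    out: List[List[Color]] = []
    for y in range(height):
        row: List[Color] = []
        for x in range(width):
            # The 3D asset wins only where it is strictly in front of the NeRF surface.
            if asset_depth[y][x] < nerf_depth[y][x]:
                row.append(asset_rgb[y][x])
            else:
                row.append(nerf_rgb[y][x])
        out.append(row)
    return out


# A 1x3 example: asset in front, asset behind, and no asset at all.
nerf_rgb = [[(10, 10, 10), (20, 20, 20), (30, 30, 30)]]
nerf_depth = [[2.0, 2.0, 2.0]]
asset_rgb = [[(255, 0, 0), (255, 0, 0), (0, 0, 0)]]
asset_depth = [[1.0, 3.0, math.inf]]

frame = composite_by_depth(nerf_rgb, nerf_depth, asset_rgb, asset_depth)
```

In this example only the first pixel takes the asset color, because that is the only place where the asset lies in front of the NeRF surface. A production renderer would typically do this test on the GPU rather than in Python loops.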

6DoF Navigation is freely available with the hybrid rendering of cloud-based NeRF.

With conventional 360° video, only rotation and zoom are available; translation is not.

We used instant neural graphics primitives from NVlabs for our NeRF implementation. Recent developments in NeRF and neural rendering are well documented in this survey paper.

The Webizing Research Lab (WRL) at the Korea Institute of Science and Technology (KIST) actively researches remote collaboration and extended reality. The Webized Extended Reality (WXR) platform from WRL uses the LiDAR camera of an iPad to create a point cloud that provides the background of a scene for remote collaboration.

@inproceedings{10.1145/3564533.3564565,
  author = {Park, Moonsik and Yoo, Byounghyun and Moon, Jee Young and Seo, Ji Hyun},
  title = {InstantXR: Instant XR Environment on the Web Using Hybrid Rendering of Cloud-Based NeRF with 3D Assets},
  year = {2022},
  isbn = {9781450399142},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3564533.3564565},
  doi = {10.1145/3564533.3564565},
  abstract = {For an XR environment to be used on a real-life task, it is crucial all the contents are created and delivered when we want, where we want, and most importantly, on time. To deliver an XR environment faster and correctly, the time spent on modeling should be considerably reduced or eliminated. In this paper, we propose a hybrid method that fuses the conventional method of rendering 3D assets with the Neural Radiance Fields (NeRF) technology, which uses photographs to create and display an instantly generated XR environment in real-time, without a modeling process. While NeRF can generate a relatively realistic space without human supervision, it has disadvantages owing to its high computational complexity. We propose a cloud-based distributed acceleration architecture to reduce computational latency. Furthermore, we implemented an XR streaming structure that can process the input from an XR device in real-time. Consequently, our proposed hybrid method for real-time XR generation using NeRF and 3D graphics is available for lightweight mobile XR clients, such as untethered HMDs. The proposed technology makes it possible to quickly virtualize one location and deliver it to another remote location, thus making virtual sightseeing and remote collaboration more accessible to the public. The implementation of our proposed architecture along with the demo video is available at https://moonsikpark.github.io/instantxr/.},
  booktitle = {Proceedings of the 27th International Conference on 3D Web Technology},
  articleno = {2},
  numpages = {9},
  keywords = {NeRF, Neural rendering, Deep learning, Neural radiance fields, Web-based XR, Cloud computing, Virtual reality, XR, Extended reality},
  location = {Evry-Courcouronnes, France},
  series = {Web3D '22}
}